Building a Movie Recommendation Agent

January 26th, 2026

In this blog post, you're going to learn what went into building movieagent.io, an agent for movie recommendations. You'll only need some basic knowledge of LLMs, agents, and vector math.

The Friday Night Problem

Most Friday nights and weekends, my wife and I would spend 30+ minutes scrolling Netflix, vetoing each other's movie suggestions. The intersection of our tastes is somewhat small. She's more into rom-coms and the occasional thriller and couldn't care less about ratings. I gravitate towards critically acclaimed stuff and 3h+ sagas. Eventually, we would settle on something neither of us really wanted, or a comfort TV show.

I felt like there was only one way to get out of this bind - build a multi-user multi-agent movie recommendation system. And with that, the nerd was sniped.

Designing the Conversation

I wanted to have a fast, interactive movie recommendation experience that would find the best pick on a given night. The agent should play the role of an arbiter, trying its best to bridge the gap in our tastes. The goal was to make the conversation flow as smoothly as possible.

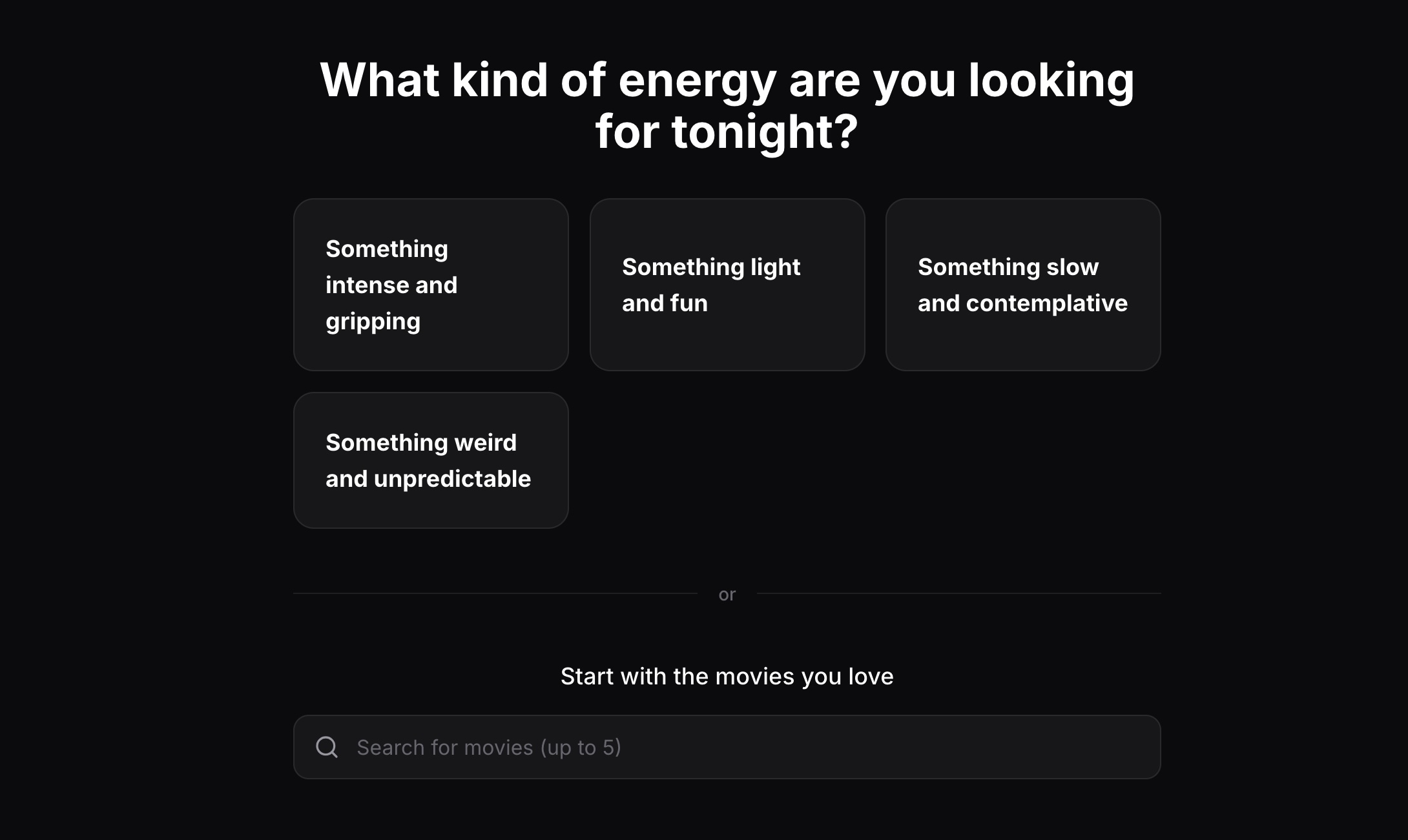

A typical agent session starts with one or two categorical questions to determine tonight's vibe. In the first question, the users can opt to not select an answer, and instead they can provide a list of movies as a starting point.

While the agent searches, it surfaces its thinking to the UI. Both to keep the UI interesting and to keep the users informed about its direction.

To add some visual flair, I decided to show posters of movies that were coming up during the agent's searches. Movie posters instill a more intuitive sense of what the agent is doing. I wanted to display an almost ethereal view of the agent browsing its memory where movies keep fading in and out as the agent is discovering them.

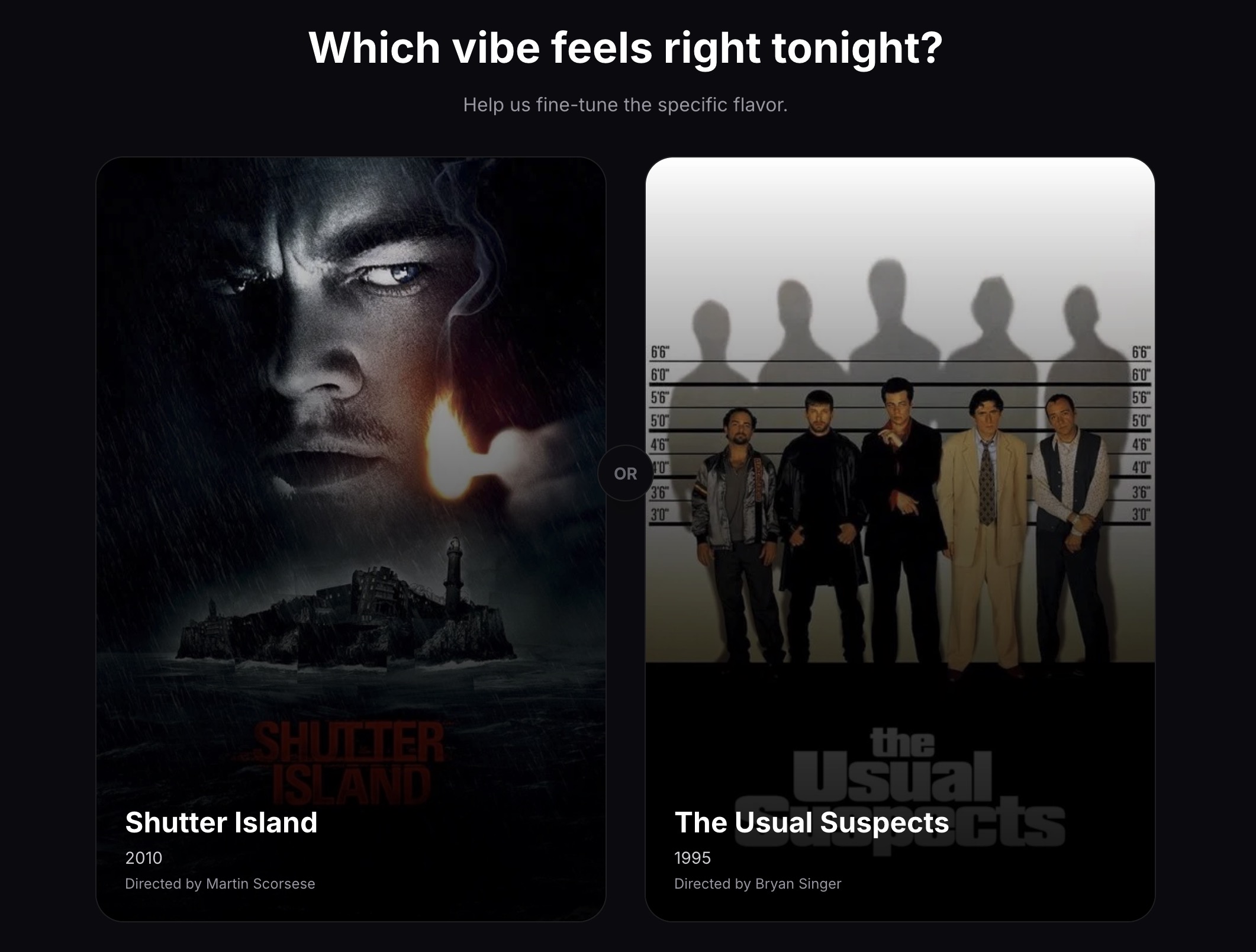

Next in the conversation, the agent usually presents a couple of movie duels that narrow down the search space. In a duel the users have to pick between two movies. The users might have seen both, just one, or none. By picking a specific movie, they are signaling to the agent more of this and less of the other movie. This gives the agent a much more concrete signal, and it can use it directly in embeddings search as we'll see later on.

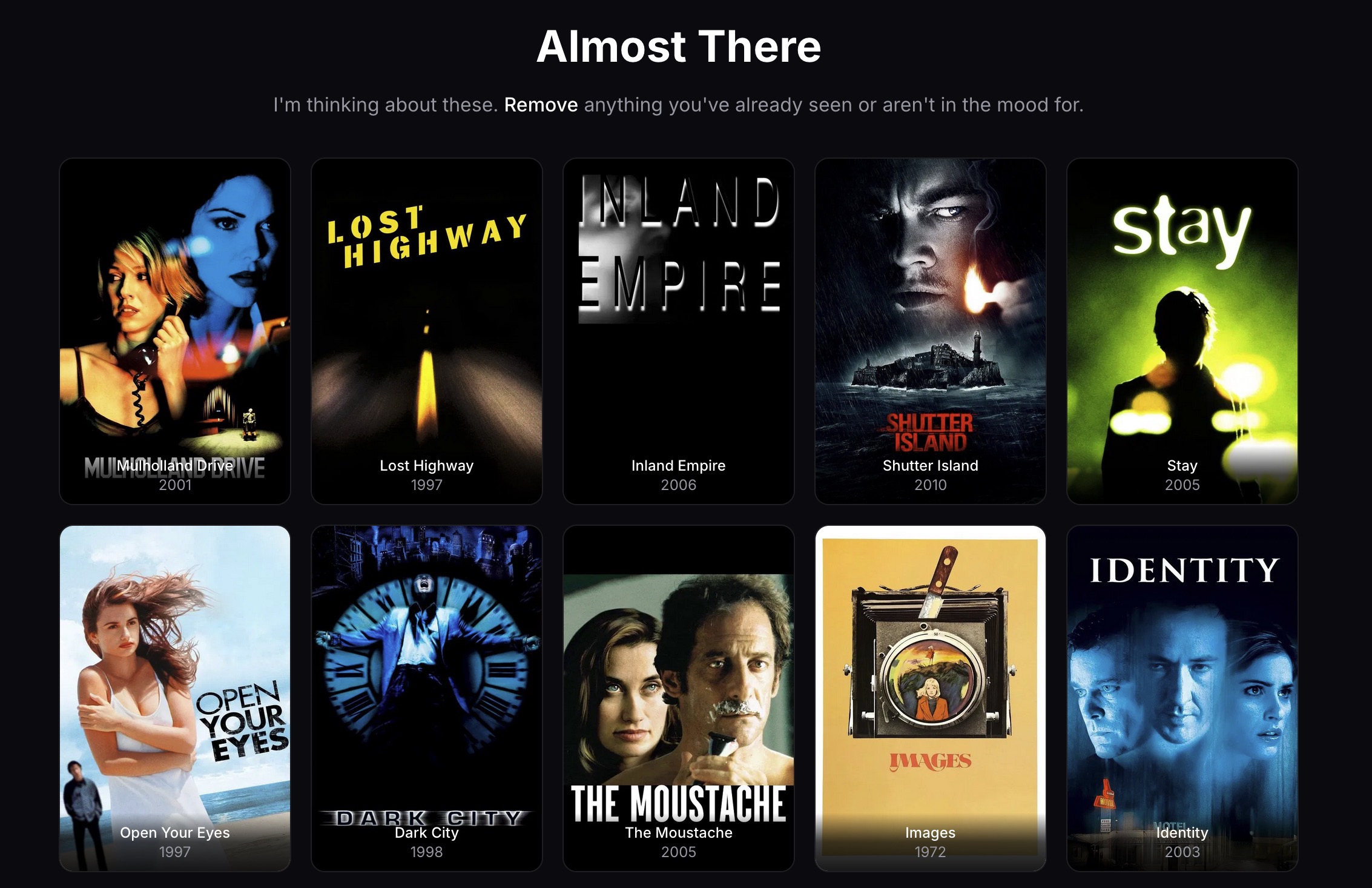

Before the final recommendations are given, I give the users one last option to intervene. Agent presents the user with 10-15 movie candidates that the agent is considering as recommendation options. Users can remove movies if they have already seen them or are just not in the mood for.

Finally, the agent crafts a personalized recommendation for each of the 3-5 movies based on the conversation and why it's the perfect pick for tonight.

I did not want to add a free-form text input field at any point in the conversation. A text input field has a certain amount of anxiety associated with it, because it requires you to eloquently explain what you want. That would grind the entire experience to a halt.

Meanwhile, picking the approximate best category or a movie in a duel requires much less mental toil. This way I keep the conversation moving at a much more rapid pace. Intuitively, it also makes it easier for the agent to reason from the constrained set of choices.

And the last, slightly selfish, reason to not include a text field is to curb any possible abuse. My Claude credits hang on by a thread as it is.

Agent Architecture

When I'm talking about the agent, I'm really talking about two agents working together. First, I have the main movie agent working as the orchestrator, and a separate search agent working as a sub-agent.

The movie agent is powered by Claude Sonnet 4.5. Its role is to drive the session and manage the conversation arc. It's ultimately responsible for delivering the recommendations to the user. It delegates searches as detailed plain-text tasks, and leaves them to the search agent to interpret and decompose.

Movie agent can use the following tools:

ask_categorical_question: Presents the user with a multiple-choice question (e.g., "What's the mood tonight?" with options like "Something light," "Edge-of-my-seat tension," "Make me cry"). Used early in the conversation to establish broad direction.ask_duel_question: Shows two movies side-by-side and asks the user to pick one. The choice signals preference direction without requiring the user to articulate why. Duel winners become concrete anchors for subsequent searches.search_movies_task: Delegates a natural language search task to the search agent. The movie agent describes what it's looking for in plain English; the search agent figures out how to find it.submit_candidates: Presents 10-15 candidate movies to the user for filtering. Users can veto films they've already seen or aren't in the mood for.submit_recommendations: Ends the session with 3-5 final recommendations, each accompanied by a personalized note explaining why this film fits tonight's mood.

The search agent, meanwhile, is powered by Haiku 4.5 which is fast, cheap, and good enough for search tasks in my experience.

Search agent can use the following tools:

embeddings_search: Queries the movie embedding space with a text description and optional positive/negative movie anchors. Supports filtering by year, genre, rating, and runtime. Returns up to 10 results per query.keyword_search: Looks up movies by exact metadata: director, actor, title fragments. Used sparingly when the task requires precise matching rather than semantic similarity.submit_search_results: Returns the aggregated results to the movie agent, formatted with semantic IDs and brief explanations of why each film matches the task.

Why not one monolithic agent? I found that a single agent struggles with the dual responsibility of managing the conversation flow and search. A monolithic agent would need to have all of the tools needed for asking questions and searching, and it has been established that more tools usually lead to worse overall agent performance.

It's also difficult to tailor a single system prompt for both of those tasks because they are so distinct. The context window would grow rapidly if I mix orchestration with numerous searches. By keeping them separate, I can make the search agent focus on the task at hand with a clean context window. Not to mention the cost savings because I can outsource the search tasks to a cheaper model. Accumulating steps across sessions, I've found that the search agent takes 10x more steps compared to the movie agent.

Search Agent

Designing the search agent was probably the most fun part of this project.

Why do we even need a search agent, why not just let the LLM recommend the movies directly? Well, the answer is in two parts:

- LLMs have knowledge cutoff date. The model companies have been getting increasingly better at releasing newer and newer versions, but still, the last ~6 months worth of movies are a black box to the models.

- LLMs are terrible at recommending diverse movies. Even with a high temperature movie recommendations seem to collapse into the same patterns.

By allowing the agent to search (or using RAG, if you're from the olden days) I inherently inject randomness into the context window, thus forcing the agent to reason about movies it found.

In my case, I am working with ~70k movies gathered from TMDB, and this project really wouldn't be possible without their API.

Side note about semantic IDs and LLMs: I found that LLMs are better at reliably referencing specific data if you give them human-readable IDs. I want both agents to be able to reference movies without having to use titles (which are hard to disambiguate) or UUIDs. So, I settled on using semantic ids in the form of {titleSlug}-{releaseYear}.

Keyword search is rarely used and only there as the definitive source of truth for the agent. For example, if the agent wanted to look up exact information about movies given some filters.

Embeddings Search

I spent most of my time extracting as much signal from embedding search as possible. I started with the simplest approach, which was embedding the TMDB metadata with Qwen3-Embedding-8B. Although, looking at the data, TMDB overviews did not capture much beyond a basic plot summary. There's no information about how the movie feels, how it looks, its intended audience, etc. We need to capture all of that, because most of the embeddings searches were looking for movies with a certain feeling not a specific plot point.

I decided to augment the movie data by generating additional descriptions of the movies to augment the TMDB data when generating embeddings. I wanted to capture the essence of a movie that will set it apart in the embedding space.

TMDB overview of The Dark Knight:

Batman raises the stakes in his war on crime. With the help of Lt. Jim Gordon and District Attorney Harvey Dent, Batman sets out to dismantle the remaining criminal organizations that plague the streets. The partnership proves to be effective, but they soon find themselves prey to a reign of chaos unleashed by a rising criminal mastermind known to the terrified citizens of Gotham as the Joker.

This is a perfectly fine description of the movie as far as plot goes. But it tells me nothing about the soul of the movie. Compare it to the generated essence of The Dark Knight:

A descent into moral vertigo wrapped in the sleek veneer of a crime epic, this film transcends superhero spectacle to probe the fragile architecture of civilized society when confronted by pure, theatrical chaos. [...] For viewers who crave intelligent blockbusters that respect their moral complexity—who want to be thrilled and unsettled simultaneously—this offers a rare fusion of visceral action and genuine ideas, leaving audiences exhilarated yet haunted by its portrait of what we become when pushed to our limits.

The generated text reeks of LLM-isms, em dashes, and other tell-tale signs that this has not been written by a human. But say what you will about the syntax, the semantics of the movie are captured pretty well. I'd never want to show this text to a human, but feeding it to an embedding model to generate a better representation worked like a charm.

It's important to note that I'm relying heavily on LLMs' internalized knowledge of these movies. After testing with a wide range of movies it was enough to provide only the basic TMDB metadata in the prompt, and the model was able to generate the essence of a movie even in the more obscure cases. There were some hallucinations as well, but since it was going to be processed by an embedding model I did not consider it as critical.

Due to the knowledge cutoff date, some of the movies in my dataset were not available during model training. For those movies (movies with release year >= 2025) I decided to include user reviews from the TMDB data.

If you recall, I mentioned that I was using movies from movie duels in embeddings search. I can augment the embedding query with specific positive or negative movie anchors using some simple vector arithmetic. That allows us to better navigate the world of representations.

Given a query embedding q, positive anchor embeddings P = {p_1, p_2, ...}, and negative anchor embeddings N = {n_1, n_2, ...}, the final search vector is computed as:

final = normalize(q + mean(P) - β * mean(N))

I settled on β = 0.5 after some experimentation. The asymmetry is intentional: positive signals from duel winners should pull harder than negative signals push. A user picking The Usual Suspects over Mad Max: Fury Road isn't saying they dislike action; they're saying they want its specific vibe more right now.

I did not opt for a vector database because the data is relatively tiny. Instead, I wrote a simple linear scan implemented in Go with pre-filtering capabilities. Pre-filtering is a key feature because I really want to narrow down the search space as much as possible, so that embedding search can be both faster and more precise. I'm sure most vector databases have this out of the box by now. But even if I had a million movies, the embeddings search will never be the bottleneck, and I can keep one dependency out of the system.

Tracing a Conversation

Let's trace through a real session to see the two agents collaborate. Early in the conversation, after I indicated I wanted something intense, the movie agent delegates its first search:

Search Task 1

Find intense and gripping films with high tension and propulsive pacing. Looking for thrillers, crime dramas, or psychological tension pieces that keep you on edge. Include variety in tone from gritty crime to cerebral suspense to action-driven intensity. Mix of eras welcome. Sort by quality and impact. Include wildcards that might surprise.

The movie agent describes what it's looking for in plain language and trusts the search agent to figure out how to find it. The search agent receives this task and decomposes it into six embedding queries, each probing a different region of movie-space:

- "propulsive, relentless pacing, mounting tension, breathless momentum, edge-of-seat intensity"

- "psychological thriller with paranoia, unreliable reality, claustrophobic dread, mind unraveling"

- "gritty crime drama, moral ambiguity, systemic corruption, procedural intensity, characters trapped in darkness"

- "tense cat-and-mouse game, hunter versus hunted, high stakes confrontation, psychological warfare"

- "contemporary prestige thriller, smart screenplay, moral complexity, sustained dread building to violence"

- "noir sensibility, shadowy underworld, morally compromised protagonist, ruthless pursuit, layered plot"

Notice how the search agent translates "intense and gripping" into multiple distinct interpretations: pacing-driven intensity, psychological dread, crime procedural tension, adversarial cat-and-mouse dynamics. A single query would collapse all of these into one muddled result set. Multiple queries let us explore the full territory.

The movie agent uses these results to construct a duel. I picked The Usual Suspects in the first duel and 10 Cloverfield Lane in the second one. Now the agent has concrete signal, not just intense, but specifically what kind of intense.

Search Task 2

Find psychological thrillers and mystery films with mind-bending twists, unreliable narration, claustrophobic tension, and narrative puzzles. Similar to The Usual Suspects (

the-usual-suspects-1995) and 10 Cloverfield Lane (10-cloverfield-lane-2016) in their use of paranoia, confined spaces, and shifting reality. Looking for films that keep you guessing, with reveals that reframe everything. Include variety from different eras. Avoid straightforward action.

The search agent recognizes movies I chose as anchor references and uses them to bias the embedding search:

- "paranoid psychological thriller unreliable narrator shifting reality mounting dread"

- positive anchors: The Usual Suspects (1995), 10 Cloverfield Lane (2016)

- "narrative twist reveals reframe entire story hidden truth puzzle structure shocking denouement"

- positive anchors: The Usual Suspects (1995), 10 Cloverfield Lane (2016)

- "confined spaces isolation claustrophobic psychological unraveling mind games pressure cooker"

- "reality slipping fractured perception protagonist cannot be trusted memory unreliable mystery"

The search pulls results toward films that share embedding-space DNA with my duel choices. The query text provides the thematic direction and the anchors provide the taste calibration. This is how a vague preference like "something intense" sharpens into "specifically this flavor of paranoid, twist-driven intensity" over the course of a conversation.

Automating Vibe Checks

During the development of the agent I played with it enough to know it could recommend decent movies for me and my wife in many different scenarios. But I didn't know if that would really generalize to different types of users.

Thankfully, I had a whole batch of synthetic movie personas I could scavenge from another movie-related project I did (topic for a different blog post). Each movie persona is constructed from a base persona taken from PersonaHub dataset, movie dimensions, and a synthetic description of their movie preferences. An example of a persona:

Base persona:

A visual artist from a different country who brings a unique perspective and artistic style to the events

Movie dimensions:

Who prefers plot-driven films

Movie preferences:

I come to cinema for what literature has always provided: a well-constructed narrative that reveals something true about human nature or society. [...]

Plot drives my attention first, always, though I notice when cinematography and design work in harmony with the story being told. I track how events connect, how information is revealed or withheld, how the machinery of the narrative operates [...]

To evaluate the movie agent I had to build yet another agent to emulate responses by a given persona. The evaluation was implemented as follows:

- Sample one or more personas from the dataset

- Initialize the appropriate number of agents for each persona

- Start the movie agent which emits the questions during the session

- Feed each question to persona agents, and wait for their response

- Feed the answers back to the movie agent

- Repeat until we get the final recommendations

The final evaluation score was not terribly scientific. I manually inspected all of the agent sessions, and tried to evaluate the recommendations based on my own knowledge of movies. Often times, the recommendations would come out with something unexpected, so I had to expand my movie universe quite a bit.

I added automated LLM judge by employing a strong model as a judge (e.g. Opus 4.5). I asked the judge to rate the recommendations on a five point scale (terrible, bad, meh, great, perfect) given the personas, and the entire conversation transcript. Initially, the judge deemed all of the recommendations as terrible or bad, because they did not fit the personas in an exact sense. So, I had to additionally prompt the evaluator that there is an information asymmetry - the movie agent does not have access to the full persona, and it can only give recommendations based on the answers. The judge was able to take that into account and the scores improved to mostly great (rarely perfect, though).

Next Steps

The great thing about movies is that there is always more. Which is nice as a viewer, but not so nice if you're trying to keep a movie recommendation service up to date. Most of my data framework assumes a single data import. I'll need to refactor some of those assumptions, and hopefully automate the process of adding new movies:

- Figure out which movies were released

- Generate the essence for each new movie

- Embed the movies

- Add movies to the database, embedding index, and keyword search index